„Free, Reliable, Ethical and Efficient“

„Frei, Robust, Ethisch und Innovativ”

„Libre, Inagotable, Bravo, Racional y Encantado“

Articles connected to Free Software (mostly as defined by the GNU Project [1]). This is more technical than Politics and Free Licensing [2], though there is some overlap.

Also see my lists of articles about specific free software projects:

There is also a German version of this page: Freie Software [6]. Most articles are not translated, so the content of the German page and of the English page is very different.

New version: draketo.de/software/wisp [7]

» I love the syntax of Python, but crave the simplicity and power of Lisp.«

display "Hello World!" ↦ (display "Hello World!")

    define : factorial n            (define (factorial n)
        if : zero? n           ↦        (if (zero? n)
           . 1                              1
           * n : factorial {n - 1}          (* n (factorial {n - 1}))))

hg clone https://hg.sr.ht/~arnebab/wisp [9]

guix install guile guile-wisp

./configure; make install from the releases.

»ArneBab's alternate sexp syntax is best I've seen; pythonesque, hides parens but keeps power« - Christopher Webber in twitter [14], in identi.ca [15] and in his blog: Wisp: Lisp, minus the parentheses [16]

♡ wow ♡

»Wisp allows people to see code how Lispers perceive it. Its structure becomes apparent.« — Ricardo Wurmus in IRC, paraphrasing the wisp statement from his talk at FOSDEM 2019 about Guix for reproducible science in HPC [17].

☺ Yay! ☺

    with (open-file "with.w" "r") as port
         format #t "~a\n" : read port

Familiar with-statement in 25 lines [18].

Update (2020-09-15): Wisp 1.0.3 [19] provides a `wisp` binary to start a wisp repl or run wisp files, builds with Guile 3, and moved to sourcehut for libre hosting: hg.sr.ht/~arnebab/wisp [9].

After installation, just run `wisp` to enter a wisp-shell (REPL).

This release also ships wisp-mode 0.2.6 (fewer autoloads), ob-wisp 0.1 (initial support for org-babel), and additional examples. New auxiliary projects include wispserve [20] for experiments with streaming and download-mesh via Guile and wisp in conf [21]: `conf new -l wisp PROJNAME` creates an autotools project with wisp while `conf new -l wisp-enter PROJNAME` creates a project with natural script writing [22] and guile doctests [23] set up. Both also install a script to run your project with minimal start time: I see 25ms to 130ms for hello world (36ms on average). The name of the script is the name of your project.

For more info about Wisp 1.0.3, see the NEWS file [24].

To test wisp v1.0.3, install Guile 2.0.11 or later [25] and bootstrap wisp:

wget https://www.draketo.de/files/wisp-1.0.3.tar_.gz;

tar xf wisp-1.0.3.tar_.gz ; cd wisp-1.0.3/;

./configure; make check;

examples/newbase60.w 123

If it prints 23 (123 in NewBase60 [26]), your wisp is fully operational.
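Why 123 turns into 23 can be checked by hand: NewBase60 is a positional base-60 notation, and 123 = 2·60 + 3. The following is a minimal Python sketch of that conversion, not the code of `examples/newbase60.w`; the function name `to_newbase60` is made up for illustration, and the alphabet is the NewBase60 character set as I understand it (digits, uppercase without I and O, underscore, lowercase without l).

```python
# NewBase60 alphabet (assumption: digits, uppercase without I and O,
# underscore, lowercase without l; 60 characters in total).
ALPHABET = "0123456789ABCDEFGHJKLMNPQRSTUVWXYZ_abcdefghijkmnopqrstuvwxyz"


def to_newbase60(number: int) -> str:
    """Convert a non-negative integer to its NewBase60 representation."""
    if number < 0:
        raise ValueError("only non-negative integers are supported")
    if number == 0:
        return ALPHABET[0]
    digits = []
    while number > 0:
        # Split off the lowest base-60 digit.
        number, remainder = divmod(number, 60)
        digits.append(ALPHABET[remainder])
    # Digits were collected lowest first, so reverse them.
    return "".join(reversed(digits))


if __name__ == "__main__":
    print(to_newbase60(123))  # prints 23
```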

If you have additional questions, see the Frequently asked Questions (FAQ) and chat in #guile at freenode [27].

That’s it - have fun with wisp syntax [28]!

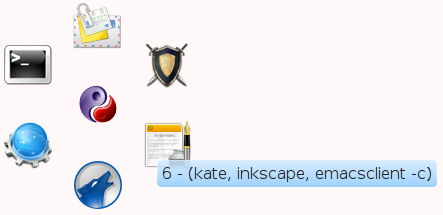

Update (2019-07-16): wisp-mode 0.2.5 [29] now provides proper indentation support in Emacs: Tab increases indentation and cycles back to zero. Shift-tab decreases indentation via previously defined indentation levels. Return preserves the indentation level (hit tab twice to go to zero indentation).

Update (2019-06-16): In c programming the uncommon way [30], specifically c-indent [31], tantalum is experimenting with combining wisp and sph-sc [32], which compiles scheme-like s-expressions to c. The result is a program written like this:

    pre-include "stdio.h"

    define (main argc argv) : int int char**
      declare i int
      printf "the number of arguments is %d\n" argc
      for : (set i 0) (< i argc) (set+ i 1)
        printf "arg %d is %s\n" (+ i 1) (array-get argv i)
      return 0
    ;; code-snippet under GPLv3+

To me that looks so close to C that it took me a moment to realize that it isn’t just using a parser which allows omitting some special syntax of C, but actually an implementation of a C-generator in Scheme (similar in spirit to cython, which generates C from Python), which results in code that looks like a more regular version of C without superfluous parens. Wisp really completes the round-trip from C over Scheme to something that looks like C but has all the regularity of Scheme, because all things considered, the code example is regular wisp-code.

And it is awesome to see tantalum take up the tool I created and use it to experiment with ways to program that I never even imagined! ♡

TLDR: tantalum uses wisp [31] for code that looks like C and compiles to C but has the regularity of Scheme!

Update (2019-06-02): The repository at https://www.draketo.de/proj/wisp/ [33] is stale at the moment, because the staticsite extension [34] I use to update it was broken by API changes and I currently don’t have the time to fix it. Therefore, until I get it fixed, the canonical repository for wisp is https://bitbucket.org/ArneBab/wisp/ [35]. I’m sorry for that. I would prefer to self-host it again, but the time to read up on what I have to adjust blocks that right now (typically the actual fix only needs a few lines). A pull-request which fixes the staticsite extension [36] for modern Mercurial would be much appreciated!

Update (2019-02-08): wisp v1.0 [37] released as announced at FOSDEM [38]. Wisp the language is complete:

display "Hello World!" ↦ (display "Hello World!")

And it achieves its goal:

“Wisp allows people to see code how Lispers perceive it. Its structure becomes apparent.” — Ricardo Wurmus at FOSDEM

Tooling, documentation, and porting of wisp are still work in progress, but before I go on, I want to thank the people from the readable lisp project [39]. Without our initial shared path, and without their encouragement, wisp would not be here today. Thank you! You’re awesome!

With this release it is time to put wisp to use. To start your own project, see the tutorial Starting a wisp project [40] and the wisp tutorial [41]. For more info, see the NEWS file [42]. To test wisp v1.0, install Guile 2.0.11 or later [25] and bootstrap wisp:

wget https://bitbucket.org/ArneBab/wisp/downloads/wisp-1.0.tar.gz;

tar xf wisp-1.0.tar.gz ; cd wisp-1.0/;

./configure; make check;

examples/newbase60.w 123

If it prints 23 (123 in NewBase60 [26]), your wisp is fully operational.

If you have additional questions, see the Frequently asked Questions (FAQ) and chat in #guile at freenode [27].

That’s it - have fun with wisp syntax [43]!

Update (2019-01-27): wisp v0.9.9.1 [44] released which includes the emacs support files missed in v0.9.9, but excludes unnecessary files which increased the release size from 500k to 9 MiB (it's now back at about 500k). To start your own wisp-project, see the tutorial Starting a wisp project [40] and the wisp tutorial [41]. For more info, see the NEWS file [45]. To test wisp v0.9.9.1, install Guile 2.0.11 or later [25] and bootstrap wisp:

wget https://bitbucket.org/ArneBab/wisp/downloads/wisp-0.9.9.1.tar.gz;

tar xf wisp-0.9.9.1.tar.gz ; cd wisp-0.9.9.1/;

./configure; make check;

examples/newbase60.w 123

If it prints 23 (123 in NewBase60 [26]), your wisp is fully operational.

That’s it - have fun with wisp syntax [46]!

Update (2019-01-22): wisp v0.9.9 [47] released with support for literal arrays in Guile (needed for doctests), example start times below 100ms, ob-wisp.el for emacs org-mode babel and work on examples: network, securepassword, and downloadmesh. To start your own wisp-project, see the tutorial Starting a wisp project [40] and the wisp tutorial [41]. For more info, see the NEWS file [48]. To test wisp v0.9.9, install Guile 2.0.11 or later [25] and bootstrap wisp:

wget https://bitbucket.org/ArneBab/wisp/downloads/wisp-0.9.9.tar.gz;

tar xf wisp-0.9.9.tar.gz ; cd wisp-0.9.9/;

./configure; make check;

examples/newbase60.w 123

If it prints 23 (123 in NewBase60 [26]), your wisp is fully operational.

That’s it - have fun with wisp syntax [46]!

Update (2018-06-26): There is now a wisp tutorial [41] for beginning programmers: “In this tutorial you will learn to write programs with wisp. It requires no prior knowledge of programming.” — Learn to program with Wisp [49], published in With Guise and Guile [50]

Update (2017-11-10): wisp v0.9.8 [51] released with installation fixes (thanks to benq!). To start your own wisp-project, see the tutorial Starting a wisp project [40]. For more info, see the NEWS file [52]. To test wisp v0.9.8, install Guile 2.0.11 or later [25] and bootstrap wisp:

wget https://bitbucket.org/ArneBab/wisp/downloads/wisp-0.9.8.tar.gz;

tar xf wisp-0.9.8.tar.gz ; cd wisp-0.9.8/;

./configure; make check;

examples/newbase60.w 123

If it prints 23 (123 in NewBase60 [26]), your wisp is fully operational.

That’s it - have fun with wisp syntax [46]!

Update (2017-10-17): wisp v0.9.7 [53] released with bugfixes. To start your own wisp-project, see the tutorial Starting a wisp project [40]. For more info, see the NEWS file [54]. To test wisp v0.9.7, install Guile 2.0.11 or later [25] and bootstrap wisp:

wget https://bitbucket.org/ArneBab/wisp/downloads/wisp-0.9.7.tar.gz;

tar xf wisp-0.9.7.tar.gz ; cd wisp-0.9.7/;

./configure; make check;

examples/newbase60.w 123

If it prints 23 (123 in NewBase60 [26]), your wisp is fully operational.

That’s it - have fun with wisp syntax [46]!

Update (2017-10-08): wisp v0.9.6 [55] released with compatibility for tests on OSX and old autotools, installation to `guile/site/(guile version)/language/wisp` for cleaner installation, debugging and warning when using not yet defined lower indentation levels, and with `wisp-scheme.scm` moved to `language/wisp.scm`. This allows creating a wisp project by simply copying `language/`. A short tutorial for creating a wisp project is available at Starting a wisp project [40] as part of With Guise and Guile [56]. For more info, see the NEWS file [57]. To test wisp v0.9.6, install Guile 2.0.11 or later [25] and bootstrap wisp:

wget https://bitbucket.org/ArneBab/wisp/downloads/wisp-0.9.6.tar.gz;

tar xf wisp-0.9.6.tar.gz ; cd wisp-0.9.6/;

./configure; make check;

examples/newbase60.w 123

If it prints 23 (123 in NewBase60 [26]), your wisp is fully operational.

That’s it - have fun with wisp syntax [46]!

Update (2017-08-19): Thanks to tantalum, wisp is now available as package for Arch Linux [58]: from the Arch User Repository (AUR) as guile-wisp-hg [11]! Instructions for installing the package are provided on the AUR page in the Arch Linux wiki [59]. Thank you, tantalum!

Update (2017-08-20): wisp v0.9.2 [60] released with many additional examples including the proof-of-concept for a minimum ceremony dialog-based game duel.w [61] and the datatype benchmarks in benchmark.w [62]. For more info, see the NEWS file [63]. To test it, install Guile 2.0.11 or later [25] and bootstrap wisp:

wget https://bitbucket.org/ArneBab/wisp/downloads/wisp-0.9.2.tar.gz;

tar xf wisp-0.9.2.tar.gz ; cd wisp-0.9.2/;

./configure; make check;

examples/newbase60.w 123

If it prints 23 (123 in NewBase60 [26]), your wisp is fully operational.

That’s it - have fun with wisp syntax [46]!

Update (2017-03-18): I removed the link to Gozala’s wisp, because it was put in maintenance mode. Quite the opposite of Guile, which is picking up speed and just released Guile version 2.2.0 [64], fully compatible with wisp (though wisp helped to find and fix one compiler bug [65], which is something I’m really happy about ☺).

Update (2017-02-05): Allan C. Webber presented my talk Natural script writing with Guile [66] in the Guile devroom [67] at FOSDEM. The talk was awesome — and recorded! Enjoy Natural script writing with Guile by "pretend Arne" ☺

presentation (pdf, 16 slides) [69] and its source (org) [70].

Have fun with wisp syntax [46]!

Update (2016-07-12): wisp v0.9.1 [71] released with a fix for multiline strings and many additional examples. For more info, see the NEWS file [72]. To test it, install Guile 2.0.11 or later [25] and bootstrap wisp:

wget https://bitbucket.org/ArneBab/wisp/downloads/wisp-0.9.1.tar.gz;

tar xf wisp-0.9.1.tar.gz ; cd wisp-0.9.1/;

./configure; make check;

examples/newbase60.w 123

If it prints 23 (123 in NewBase60 [26]), your wisp is fully operational.

That’s it - have fun with wisp syntax [46]!

Update (2016-01-30): I presented Wisp [73] in the Guile devroom [74] at FOSDEM. The reception was unexpectedly positive: given some of the backlash the readable project [39] got I expected an exceptionally sceptical audience, but people rather asked about ways to put Wisp to good use, for example in templates, whether it works in the REPL (yes, it does) and whether it could help people start into Scheme.

The atmosphere in the Guile devroom was very constructive and friendly during all talks, and I’m happy I could meet the Hackers there in person. I’m definitely taking good memories with me. Sadly the video did not make it, but the schedule-page [73] includes the presentation (pdf, 10 slides) [81] and its source (org) [82].

Wisp is ”The power and simplicity of #lisp [75] with the familiar syntax of #python [76]” talk by @ArneBab [77] #fosdem [78] pic.twitter.com/TaGhIGruIU [79] - Jan Nieuwenhuizen (@JANieuwenhuizen) January 30, 2016 [80]

Have fun with wisp syntax [46]!

Update (2016-01-04): Wisp is available in GNU Guix [83]! Thanks to the package [84] from Christopher Webber you can try Wisp easily on top of any distribution:

guix package -i guile guile-wisp

guile --language=wisp

This already gives you Wisp at the REPL (take care to follow all instructions for installing Guix on top of another distro, especially the locales).

Have fun with wisp syntax [46]!

Update (2015-10-01): wisp v0.9.0 [85] released which no longer depends on Python for bootstrapping releases (but ./configure still asks for it — a fix for another day). And thanks to Christopher Webber there is now a patch [86] to install wisp within GNU Guix [83]. For more info, see the NEWS file [72]. To test it, install Guile 2.0.11 or later [25] and bootstrap wisp:

wget https://bitbucket.org/ArneBab/wisp/downloads/wisp-0.9.0.tar.gz;

tar xf wisp-0.9.0.tar.gz ; cd wisp-0.9.0/;

./configure; make check;

examples/newbase60.w 123

If it prints 23 (123 in NewBase60 [26]), your wisp is fully operational.

That’s it - have fun with wisp syntax [46]!

Update (2015-09-12): wisp v0.8.6 [87] released with fixed macros in interpreted code, chunking by top-level forms, `: .` parsed as nothing, ending chunks with a trailing period, updated example evolve [88] and added examples newbase60 [89], cli [90], cholesky decomposition [91], closure [92] and hoist in loop [93]. For more info, see the NEWS file [72]. To test it, install Guile 2.0.x or 2.2.x [25] and Python 3 [94] and bootstrap wisp:

wget https://bitbucket.org/ArneBab/wisp/downloads/wisp-0.8.6.tar.gz;

tar xf wisp-0.8.6.tar.gz ; cd wisp-0.8.6/;

./configure; make check;

examples/newbase60.w 123

If it prints 23 (123 in NewBase60 [26]), your wisp is fully operational.

That’s it - have fun with wisp syntax [46]! And a happy time together for the ones who merge their paths today ☺

Update (2015-04-10): wisp v0.8.3 [95] released with line information in backtraces. For more info, see the NEWS file [72]. To test it, install Guile 2.0.x or 2.2.x [25] and Python 3 [94] and bootstrap wisp:

wget https://bitbucket.org/ArneBab/wisp/downloads/wisp-0.8.3.tar.gz;

tar xf wisp-0.8.3.tar.gz ; cd wisp-0.8.3/;

./configure; make check;

guile -L . --language=wisp tests/factorial.w; echo

If it prints 120120 (two times 120, the factorial of 5), your wisp is fully operational.

That’s it - have fun with wisp syntax [46]!

Update (2015-03-18): wisp v0.8.2 [96] released with reader bugfixes, new examples [97] and an updated draft for SRFI 119 (wisp) [98]. For more info, see the NEWS file [72]. To test it, install Guile 2.0.x or 2.2.x [25] and Python 3 [94] and bootstrap wisp:

wget https://bitbucket.org/ArneBab/wisp/downloads/wisp-0.8.2.tar.gz;

tar xf wisp-0.8.2.tar.gz ; cd wisp-0.8.2/;

./configure; make check;

guile -L . --language=wisp tests/factorial.w; echo

If it prints 120120 (two times 120, the factorial of 5), your wisp is fully operational.

That’s it - have fun with wisp syntax [46]!

Update (2015-02-03): The wisp SRFI just got into draft state: SRFI-119 [98] — on its way to an official Scheme Request For Implementation!

Update (2014-11-19): wisp v0.8.1 [99] released with reader bugfixes. To test it, install Guile 2.0.x [25] and Python 3 [94] and bootstrap wisp:

wget https://bitbucket.org/ArneBab/wisp/downloads/wisp-0.8.1.tar.gz;

tar xf wisp-0.8.1.tar.gz ; cd wisp-0.8.1/;

./configure; make check;

guile -L . --language=wisp tests/factorial.w; echo

If it prints 120120 (two times 120, the factorial of 5), your wisp is fully operational.

That’s it - have fun with wisp syntax [46]!

Update (2014-11-06): wisp v0.8.0 [100] released! The new parser now passes the testsuite and wisp files can be executed directly. For more details, see the NEWS [101] file. To test it, install Guile 2.0.x [25] and bootstrap wisp:

wget https://bitbucket.org/ArneBab/wisp/downloads/wisp-0.8.0.tar.gz;

tar xf wisp-0.8.0.tar.gz ; cd wisp-0.8.0/;

./configure; make check;

guile -L . --language=wisp tests/factorial.w; echo

If it prints 120120 (two times 120, the factorial of 5), your wisp is fully operational.

That’s it - have fun with wisp syntax [46]!

On a personal note: It’s mindboggling that I could get this far! This is actually a fully bootstrapped indentation sensitive programming language with all the power of Scheme [102] underneath, and it’s a one-person when-my-wife-and-children-sleep sideproject. The extensibility of Guile [25] is awesome!

Update (2014-10-17): wisp v0.6.6 [103] has a new implementation of the parser which now uses the scheme read function. `wisp-scheme.w` parses directly to a scheme syntax-tree instead of a scheme file to be more suitable to an SRFI. For more details, see the NEWS [104] file. To test it, install Guile 2.0.x [25] and bootstrap wisp:

wget https://bitbucket.org/ArneBab/wisp/downloads/wisp-0.6.6.tar.gz;

tar xf wisp-0.6.6.tar.gz; cd wisp-0.6.6;

./configure; make;

guile -L . --language=wisp

That’s it - have fun with wisp syntax [46] at the REPL!

Caveat: It does not support the ' prefix yet (syntax point 4).

Update (2014-01-04): Resolved the name-clash together with Steve Purcell and Kris Jenkins: the javascript wisp-mode was renamed to wispjs-mode [105] and wisp.el is called wisp-mode 0.1.5 [106] again. It provides syntax highlighting for Emacs and minimal indentation support via tab. You can install it with `M-x package-install wisp-mode`

Update (2014-01-03): wisp-mode.el was renamed to wisp 0.1.4 [107] to avoid a name clash with wisp-mode for the javascript-based wisp.

Update (2013-09-13): Wisp now has a REPL! Thanks go to GNU Guile [25] and especially Mark Weaver, who guided me through the process (along with nalaginrut who answered my first clueless questions…).

To test the REPL, get the current code snapshot [108], unpack it, run `./bootstrap.sh`, start guile with `$ guile -L .` (requires guile 2.x) and enter `,language wisp`.

Example usage:

display "Hello World!\n"

then hit enter thrice.

Voilà, you have wisp at the REPL!

Caveat: the wisp-parser is still experimental and contains known bugs. Use it for testing, but please do not rely on it for important stuff, yet.

Update (2013-09-10): wisp-guile.w can now parse itself [109]! Bootstrapping: The magical feeling of seeing a language (dialect) grow up to live by itself: `python3 wisp.py wisp-guile.w > 1 && guile 1 wisp-guile.w > 2 && guile 2 wisp-guile.w > 3 && diff 2 3`. Starting today, wisp is implemented in wisp.

Update (2013-08-08): Wisp 0.3.1 [110] released (Changelog [111]).

Wisp is a simple preprocessor which turns indentation sensitive syntax into Lisp syntax.

The basic goal is to create the simplest possible indentation based syntax which is able to express all possibilities of Lisp.

Basically it works by inferring the parentheses of lisp by reading the indentation of lines.

It is related to SRFI-49 [112] and the readable Lisp S-expressions Project [39] (and actually inspired by the latter), but it tries to Keep it Simple and Stupid: wisp is a simple preprocessor which can be called by any lisp implementation to add support for indentation sensitive syntax. To repeat the initial quote:

I love the syntax of Python, but crave the simplicity and power of Lisp.

With wisp I hope to make it possible to create lisp code which is easily readable for non-programmers (and me!) and at the same time keeps the simplicity and power of Lisp.

Its main technical improvement over SRFI-49 and Project Readable is using lines prefixed by a dot (". ") to mark the continuation of the parameters of a function after intermediate function calls.

The dot-syntax means, instead of marking every function call, it marks every line which does not begin with a function call - which is the much less common case in lisp-code.

See the Updates for information on how to get the current version of wisp.

Enter in Enter three witches [113].

display "Hello World!" ↦ (display "Hello World!")

    display                              ↦  (display
      string-append "Hello " "World!"    ↦    (string-append "Hello " "World!"))

    display                              ↦  (display
      string-append "Hello " "World!"    ↦    (string-append "Hello " "World!"))
    display "Hello Again!"               ↦  (display "Hello Again!")

    ' "Hello World!"                     ↦  '("Hello World!")

    string-append "Hello"                ↦  (string-append "Hello"
      string-append " " "World"          ↦    (string-append " " "World")
      . "!"                              ↦    "!")

    let                                  ↦  (let
      :                                  ↦    ((msg "Hello World!"))
        msg "Hello World!"               ↦
      display msg                        ↦    (display msg))

    define : hello who                   ↦  (define (hello who)
      display                            ↦    (display
        string-append "Hello " who "!"   ↦      (string-append "Hello " who "!")))

    define : hello who                   ↦  (define (hello who)
    _ display                            ↦    (display
    ___ string-append "Hello " who "!"   ↦      (string-append "Hello " who "!")))

To make that easier to understand, let’s just look at the examples in more detail:

display "Hello World!" ↦ (display "Hello World!")

This one is easy: Just add a bracket before and after the content.

    display "Hello World!"    ↦  (display "Hello World!")
    display "Hello Again!"    ↦  (display "Hello Again!")

Multiple lines with the same indentation are separate function calls (except if one of them starts with ". ", see Continue arguments, shown in a few lines).

    display                              ↦  (display
      string-append "Hello " "World!"    ↦    (string-append "Hello " "World!"))

If a line is more indented than a previous line, it is a sibling to the previous function: The brackets of the previous function get closed after the (last) sibling line.
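The rules so far (every line is a function call, deeper lines are nested, the bracket closes after the last deeper line) fit into a few lines of code. The following Python sketch is an illustration only, not the wisp implementation: the name `wisp_basic_to_lisp` is made up, and it deliberately ignores everything introduced later (leading dots, colons, underscores, quotes, comments and multiline strings).

```python
def wisp_basic_to_lisp(source: str) -> str:
    """Wrap every line in brackets and close them based on indentation."""
    out = []
    open_indents = []  # indentation of every bracket that is still open
    for line in source.splitlines():
        if not line.strip():
            continue  # this sketch simply skips empty lines
        indent = len(line) - len(line.lstrip(" "))
        # A line that is not deeper than an open call ends that call.
        closing = ""
        while open_indents and open_indents[-1] >= indent:
            open_indents.pop()
            closing += ")"
        if out:
            out[-1] += closing
        out.append(" " * indent + "(" + line.strip())
        open_indents.append(indent)
    if out:
        # End of input: close whatever is still open.
        out[-1] += ")" * len(open_indents)
    return "\n".join(out)


EXAMPLE = '''display
  string-append "Hello " "World!"
display "Hello Again!"'''

if __name__ == "__main__":
    print(wisp_basic_to_lisp(EXAMPLE))
```

Run on the example, it reproduces the right-hand column shown above for the nested `display` followed by a second `display`.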

By using a . followed by a space as the first non-whitespace character on a line, you can mark it as continuation of the previous less-indented line. Then it is no function call but continues the list of parameters of the function.

I use a very synthetic example here to avoid introducing additional unrelated concepts.

    string-append "Hello"          ↦  (string-append "Hello"
      string-append " " "World"    ↦    (string-append " " "World")
      . "!"                        ↦    "!")

As you can see, the final "!" is not treated as a function call but as parameter to the first string-append.

This syntax extends the notion of the dot as identity function. In many lisp implementations¹ we already have `(= a (. a))`.

    = a      ↦  (= a
      . a    ↦    (. a))

With wisp, we extend that equality to `(= '(a b c) '((. a b c)))`.

. a b c ↦ a b c
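The dot rule needs only one extra branch in an indentation-to-bracket converter: a line starting with ". " still closes deeper calls, but does not open a bracket of its own. The Python sketch below illustrates this under the same simplifying assumptions as any toy converter (no colons, underscores, quotes, comments or multiline strings); `wisp_with_dots_to_lisp` is a made-up name and not part of wisp.

```python
def wisp_with_dots_to_lisp(source: str) -> str:
    """Indentation to brackets, with '. ' marking continued arguments."""
    out = []
    open_indents = []  # indentation of every bracket that is still open
    for line in source.splitlines():
        if not line.strip():
            continue
        indent = len(line) - len(line.lstrip(" "))
        content = line.strip()
        # Close all calls that are at least as deeply indented as this line.
        closing = ""
        while open_indents and open_indents[-1] >= indent:
            open_indents.pop()
            closing += ")"
        if out:
            out[-1] += closing
        if content.startswith(". "):
            # Continuation line: plain arguments of the enclosing call,
            # so no bracket is opened and nothing is pushed.
            out.append(" " * indent + content[2:].strip())
        else:
            out.append(" " * indent + "(" + content)
            open_indents.append(indent)
    if out:
        out[-1] += ")" * len(open_indents)
    return "\n".join(out)


EXAMPLE = '''string-append "Hello"
  string-append " " "World"
  . "!"'''

if __name__ == "__main__":
    print(wisp_with_dots_to_lisp(EXAMPLE))
```

For the synthetic `string-append` example this yields the same three lines as the right-hand column above, with the final "!" as a plain argument of the outer call.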

If you use `let`, you often need double brackets. Since using pure indentation in empty lines would be really error-prone, we need a way to mark a line as indentation level.

To add multiple brackets, we use a colon to mark an intermediate line as additional indentation level.

    let                       ↦  (let
      :                       ↦    ((msg "Hello World!"))
        msg "Hello World!"    ↦
      display msg             ↦    (display msg))

Since we already use the colon as syntax element, we can make it possible to use it everywhere to open a bracket - even within a line containing other code. Since wide unicode characters would make it hard to find the indentation of that colon, such an inline-function call always ends at the end of the line. Practically that means, the opened bracket of an inline colon always gets closed at the end of the line.

    define : hello who                            ↦  (define (hello who)
      display : string-append "Hello " who "!"    ↦    (display (string-append "Hello " who "!")))

This also allows using inline-let:

    let                       ↦  (let
      : msg "Hello World!"    ↦    ((msg "Hello World!"))
      display msg             ↦    (display msg))

and can be stacked for more compact code:

    let : : msg "Hello World!"    ↦  (let ((msg "Hello World!"))
      display msg                 ↦    (display msg))
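Because an inline colon always closes at the end of its line, it can be handled per line, before any indentation processing. The Python sketch below shows that idea on the content of a single line (without its indentation and without the bracket the line itself receives). The name `expand_inline_colons` is made up for illustration, and the sketch makes simplifying assumptions: tokens are separated by single spaces, and a " : " inside a string literal would wrongly be treated as syntax. An escaped `\:` is left untouched.

```python
def expand_inline_colons(content: str) -> str:
    """Replace each standalone ':' by '(' and close them all at line end."""
    result = []
    opened = 0
    glue_next = True  # True at line start and directly after an opened bracket
    for token in content.split(" "):
        is_colon = token == ":"
        text = "(" if is_colon else token
        # No space at the start of the line or right after "(".
        result.append(text if glue_next else " " + text)
        glue_next = is_colon
        if is_colon:
            opened += 1
    # Every bracket opened by a colon is closed at the end of the line.
    return "".join(result) + ")" * opened


if __name__ == "__main__":
    print(expand_inline_colons('display : string-append "Hello " who "!"'))
    print(expand_inline_colons('let : : msg "Hello World!"'))
```

The first call gives `display (string-append "Hello " who "!")` and the second gives `let ((msg "Hello World!"))`; wrapping each line in its own bracket then leads to the forms shown above.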

To make the indentation visible in non-whitespace-preserving environments like badly written html, you can replace any number of consecutive initial spaces by underscores, as long as at least one whitespace is left between the underscores and any following character. You can escape initial underscores by prefixing the first one with \ ("\___ a" → "(___ a)"), if you have to use them as function names.

    define : hello who                    ↦  (define (hello who)
    _ display                             ↦    (display
    ___ string-append "Hello " who "!"    ↦      (string-append "Hello " who "!")))
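The underscore rule can be applied as a normalization step on each line before the indentation is measured. The following Python sketch shows one way to do that; `underscores_to_spaces` is a hypothetical helper, not a function of wisp, and it assumes that a leading backslash before underscores is only ever used as the escape described above.

```python
import re


def underscores_to_spaces(line: str) -> str:
    """Turn leading underscores (followed by a space) into spaces."""
    match = re.match(r"_+(?= )", line)
    if match:
        # "_ display" has one underscore plus one space: two columns.
        return " " * match.end() + line[match.end():]
    if line.startswith("\\_"):
        # Escaped: drop the backslash, keep the underscores as a name.
        return line[1:]
    return line


if __name__ == "__main__":
    for example in ["define : hello who",
                    "_ display",
                    '___ string-append "Hello " who "!"']:
        print(underscores_to_spaces(example))
```

After this step the three lines of the example are indented by 0, 2 and 4 spaces, so any indentation-based processing sees the same structure as in the version written with spaces.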

I do not like adding any unnecessary syntax element to lisp. So I want to show explicitly why the syntax elements are required to meet the goal of wisp: indentation-based lisp with a simple preprocessor.

We have to be able to continue the arguments of a function after a call to a function, and we must be able to split the arguments over multiple lines. That’s what the leading dot allows. Also the dot at the beginning of the line as marker of the continuation of a variable list is a generalization of using the dot as identity function - which is an implementation detail in many lisps.

`(. a)` is just `a`.

So for the single variable case, this would not even need additional parsing: wisp could just parse ". a" to "(. a)" and produce the correct result in most lisps. But forcing programmers to always use separate lines for each parameter would be very inconvenient, so the definition of the dot at the beginning of the line is extended to mean “take every element in this line as parameter to the parent function”.

Essentially this dot-rule means that we mark variables at the beginning of lines instead of marking function calls, since in Lisp variables at the beginning of a line are much rarer than in other programming languages. In Lisp, assigning a value to a variable is a function call while it is a syntax element in many other languages. What would be a variable at the beginning of a line in other languages is a function call in Lisp.

(Optimize for the common case, not for the rare case)

For double brackets and for some other cases we must have a way to mark indentation levels without any code. I chose the colon, because it is the most common non-alpha-numeric character in normal prose which is not already reserved as syntax by lisp when it is surrounded by whitespace, and because it already gets used for marking keyword arguments to functions in Emacs Lisp, so it does not add completely alien characters.

The function call via inline " : " is a limited generalization of using the colon to mark an indentation level: If we add a syntax-element, we should use it as widely as possible to justify the added syntax overhead.

But if you need to use : as variable or function name, you can still do that by escaping it with a backslash (example: "\:"), so this does not forbid using the character.

In Python the whitespace hostile html already presents problems with sharing code - for example in email list archives and forums. But in Python the indentation can mostly be inferred by looking at the previous line: If that ends with a colon, the next line must be more indented (there is nothing to clearly mark reduced indentation, though). In wisp we do not have this help, so we need a way to survive in that hostile environment.

The underscore is commonly used to denote a space in URLs, where spaces are inconvenient, but it is rarely used in lisp (where the dash ("-") is mostly used instead), so it seems like a natural choice.

You can still use underscores anywhere but at the beginning of the line. If you want to use it at the beginning of the line you can simply escape it by prefixing the first underscore with a backslash (example: "\___").

A few months ago I found the readable Lisp project [39] which aims at producing indentation based lisp, and I was thrilled. I had already done a small experiment with an indentation to lisp parser, but I was more than willing to throw out my crappy code for the well-integrated parser they had.

Fast forward half a year. It’s February 2013 and I started reading the readable list again after being out of touch for a few months because the birth of my daughter left little time for side-projects. And I was shocked to see that the readable folks had piled lots of additional syntax elements on their beautiful core model, which for me destroyed the simplicity and beauty of lisp. When language programmers add syntax using \\, $ and <>, you can be sure that it is no simple lisp anymore. To me readability does not just mean beautiful code, but rather easy to understand code with simple concepts which are used consistently. I prefer having some ugly corner cases to adding more syntax which makes the whole language more complex.

I told them about that [114] and proposed a simpler structure which achieved almost the same as their complex structure. To my horror they proposed adding my proposal to readable, making it even more bloated (in my opinion). We discussed a long time - the current syntax for inline-colons is a direct result of that discussion in the readable list - then Alan wrote me a nice mail [115], explaining that readable will keep its direction. He finished with «We hope you continue to work with or on indentation-based syntaxes for Lisp, whether sweet-expressions, your current proposal, or some other future notation you can develop.»

It took me about a month to answer him, but the thought never left my mind (@Alan: See what you did? You anchored the thought of indentation based lisp even deeper in my mind. As if I did not already have too many side-projects… :)).

Then I had finished the first version of a simple whitespace-to-lisp preprocessor.

And today I added support for reading indentation based lisp from standard input which allows actually using it as in-process preprocessor without needing temporary files, so I think it is time for a real release outside my Mercurial repository [116].

So: Have fun with wisp v0.2 (tarball) [117]!

PS: Wisp is linked in the comparisons of SRFI-110 [118].

| Attachment | Size |
|---|---|
| wisp-1.0.3.tar_.gz [19] | 756.71 KB |

Update: The recording is now online at ftp.fau.de/fosdem/2017/K.4.601/naturalscriptwritingguile.vp8.webm [119]

Here’s the stream to the Guile [25] devroom at #FOSDEM: https://live.fosdem.org/watch/k4601 [120]

Schedule (also on the FOSDEM page [67]):

Every one of these talks sounds awesome! Here’s where we get deep.

Update 2020: In Dryads Wake [121] I am starting a game using the way presented here to write dialogue-focused games with minimal ceremony. Demo: https://dryads-wake.1w6.org [122]

Update 2018: Bitbucket is dead to me. You can find the source at https://hg.sr.ht/~arnebab/ews [123]

Update 2017: A matured version of the work shown here was presented at FOSDEM 2017 as Natural script writing with Guile [66]. There is also a video of the presentation [119] (held by Chris Allan Webber; more info… [124]). Happy Hacking!

Programming languages allow expressing ideas in non-ambiguous ways. Let’s do a play.

say Yes, I do!

Yes, I do!

This is a sketch of applying Wisp [125] to a pet issue of mine: Writing the story of games with minimal syntax overhead, but still using a full-fledged programming language. My previous try was the TextRPG [126], using Python. It was fully usable. This experiment drafts a solution to show how much more is possible with Guile Scheme using Wisp syntax (also known as SRFI-119 [98]).

To follow the code here, you need Guile 2.0.11 [25] on a GNU Linux system. Then you can install Wisp and start a REPL with

wget https://bitbucket.org/ArneBab/wisp/downloads/wisp-0.8.6.tar.gz

tar xf wi*z; cd wi*/; ./c*e; make check; guile -L . --language=wisp

For finding minimal syntax, the first thing to do is to look at how such a structure would be written for humans. Let’s take the obvious and use Shakespeare: Macbeth, Act 1, Scene 1 [127] (also it’s public domain, so we avoid all copyright issues). Note that in the original, the second and last non-empty line are shown as italic.

SCENE I. A desert place.

Thunder and lightning. Enter three Witches

First Witch

When shall we three meet again

In thunder, lightning, or in rain?

Second Witch

When the hurlyburly's done,

When the battle's lost and won.

Third Witch

That will be ere the set of sun.

First Witch

Where the place?

Second Witch

Upon the heath.

Third Witch

There to meet with Macbeth.

First Witch

I come, Graymalkin!

Second Witch

Paddock calls.

Third Witch

Anon.

ALL

Fair is foul, and foul is fair:

Hover through the fog and filthy air.

Exeunt

Let’s analyze this: A scene header, a scene description with a list of people, then the simple format

person

something said

and something more

For this draft, it should suffice to reproduce this format with a full-fledged programming language.

This is how our code should look:

First Witch

When shall we three meet again

In thunder, lightning, or in rain?

As a first step, let’s see how code which simply prints this would look in plain Wisp. The simplest way would just use a multiline string:

display "First Witch
    When shall we three meet again
    In thunder, lightning, or in rain?\n"

That works, but it’s not really nice. For one thing, the program does not have any of the semantic information a human would have, so if we wanted to show the First Witch in a different color than the Second Witch, we’d already be lost. Also throwing everything in a string might work, but when we need highlighting of certain parts, it gets ugly: We actually have to do string parsing by hand.
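To make the cost of "string parsing by hand" concrete, here is a rough sketch in Python rather than Wisp. It is not part of the original experiment. It assumes that spoken lines are indented below the speaker's name; `parse_dialogue` and `SCENE` are hypothetical names chosen for this illustration.

```python
def parse_dialogue(text):
    """Split 'speaker / indented lines' text into (speaker, [lines]) pairs."""
    parts = []
    for raw in text.splitlines():
        if not raw.strip():
            continue  # skip blank lines
        if raw[0].isspace():
            # an indented line belongs to the most recent speaker
            if not parts:
                raise ValueError("spoken line before any speaker")
            parts[-1][1].append(raw.strip())
        else:
            # an unindented line starts a new speaker
            parts.append((raw.strip(), []))
    return parts


SCENE = """\
First Witch
    When shall we three meet again
    In thunder, lightning, or in rain?
Second Witch
    When the hurlyburly's done,
    When the battle's lost and won.
"""

for speaker, lines in parse_dialogue(SCENE):
    print(speaker.upper())  # the speaker is now data, so it could get its own color
    for line in lines:
        print("    " + line)
```

Even this small parser knows nothing about emphasis inside a line; each such feature would need more hand-written parsing.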

But this is Scheme, so there’s a better way. We can go as far as writing the sentences plainly, if we add a macro which grabs the variable names for us. We can do a simple form of this in just six short lines:

define-syntax-rule : First_Witch a ...
  format #t "~A\n"
    string-join
      map : lambda (x) (string-join (map symbol->string x))
        quote : a ...
      . "\n"

This already gives us the following syntax:

First_Witch
    When shall we three meet again
    In thunder, lightning, or in rain?

which prints

When shall we three meet again

In thunder, lightning, or in rain?

Note that :, . and , are only special when they are preceded by whitespace or are the first elements on a line, so we can freely use them here.

To polish the code, we could get rid of the underscore by treating everything on the first line as part of the character (indented lines are sublists of the main list, so a recursive syntax-case [128] macro can distinguish them easily), and we could add highlighting with comma-prefixed parens (via standard Scheme preprocessing these get transformed into (unquote (...))). Finally we could add a macro for the scene, which creates these specialized parsers for all persons.

A completed parser could then read input files like the following:

SCENE I. A desert place.

Thunder and lightning.

Enter : First Witch
        Second Witch
        Third Witch

First Witch
    When shall ,(emphasized we three) meet again
    In thunder, lightning, or in rain?

Second Witch
    When the hurlyburly's done,
    When the battle's lost and won.

; ...

ALL
    Fair is foul, and foul is fair:
    Hover through the fog and filthy air.

action Exeunt

And with that the solution is sketched. I hope it was interesting for you to see how easy it is to create this!

Note also that this is not just a specialized text-parser. It provides access to all of Guile Scheme, so if you need interactivity or something like the branching story [129] from TextRPG, scene writers can easily add it without requiring help from the core system. That’s part of the Freedom for Developers from the language implementors which is at the core of GNU Guile [25].

Don’t use this as data interchange format for things downloaded from the web, though: It does give access to a full Turing complete language. That’s part of its power which allows you to realize a simple syntax without having to implement all kinds of specialized features which are needed for only one or two scenes. If you want to exchange the stories, better create a restricted interchange-format which can be exported from scenes written in the general format. Use lossy serialization to protect your users.
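One way such a restricted, lossy export could look, sketched in Python for illustration. The event structure, the field names and the allowed kinds are assumptions made up for this example, not the format used by the Wisp code. The point is that only plain data of known kinds survives the export, and the importer never evaluates anything.

```python
import json

ALLOWED_KINDS = {"say", "action"}  # hypothetical whitelist of event kinds


def export_scene(events):
    """Serialize only whitelisted, plain-data events; drop everything else."""
    safe = []
    for event in events:
        kind = event.get("kind")
        if kind not in ALLOWED_KINDS:
            continue  # lossy on purpose: embedded code and unknown kinds are dropped
        safe.append({
            "kind": kind,
            "who": str(event.get("who", "")),
            "lines": [str(line) for line in event.get("lines", [])],
        })
    return json.dumps(safe)


def import_scene(payload):
    """Read the interchange format as data only; nothing is evaluated."""
    events = json.loads(payload)
    if not isinstance(events, list):
        raise ValueError("expected a list of events")
    for event in events:
        if not isinstance(event, dict) or event.get("kind") not in ALLOWED_KINDS:
            raise ValueError("unexpected event in scene")
    return events


scene = [
    {"kind": "say", "who": "First Witch", "lines": ["When shall we three meet again"]},
    {"kind": "code", "source": "(system \"rm -rf ~\")"},  # must not survive the export
    {"kind": "action", "who": "", "lines": ["Exeunt"]},
]
payload = export_scene(scene)
print(import_scene(payload))
```

Scenes that rely on interactivity lose it in the export. That is the price for being able to load stories from strangers.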

And that’s all I wanted to say ☺

Happy Hacking!

PS: For another use of Shakespeare in programming languages, see the Shakespeare programming language [130]. Where this article uses Wisp [131] as a very low ceremony language to represent very high level concepts, the Shakespeare programming language takes the opposite approach by providing an extremely high-ceremony language for very low-level concepts. Thanks to ZMeson [132] for reminding me ☺

| Attachment | Size |
|---|---|
| 2015-09-12-Sa-Guile-scheme-wisp-for-low-ceremony-languages.org [133] | 6.35 KB |
| enter-three-witches.w [134] | 1.23 KB |

Python is the first language I loved. I dreamt in Python, I planned in Python, I thought I would never need anything else.

- Free: html [136] | pdf [137]

- Softcover: 14.95 € [135]

with pdf, epub, mobi

- Source: download [138]

free licensed under GPL

Update 2025: I published the libre book Naming & Logic: programming essentials with Scheme [139], a quick start into Guile Scheme. It gets you from your first define to deploying your application, either as a server, or as web assembly, or as binaries. Thanks to the minimalism of Scheme, it just needs 64 DinA6 pages while teaching the best practices I found in a decade of using Guile Scheme.

Python is a language where I can teach a handful of APIs and cause people to learn most of the language as a whole. — Raymond Hettinger (2011-06-20) [140]

Why, I feel all thin, sort of stretched if you know what I mean: like butter that has been scraped over too much bread. — Bilbo Baggins in “The Lord of the Rings”

You must unlearn what you have learned. — Yoda in “The Empire Strikes Back“

Guile Scheme [25] is the official GNU extension language, used for example in GNU Cash [141] and GNU Guix [83] and the awesome Lilypond [142].

Every sufficiently complex application/language/tool will either have to use Lisp or reinvent it the hard way. — Greenspuns 10th rule [143]

As free cultural work [144], py2guile is licensed under the GPLv3 or later [145]. You are free to share, change, remix and even to resell it as long as you say that it’s from me (attribution) and provide the whole corresponding source under the GPL (sharealike).

For instructions on building the ebook yourself, see the README in the source.

Happy Hacking!

— Arne Babenhauserheide

py2guile [146] is a book I wrote about Python and Guile Scheme. It’s selling at 14.95 € [147] for the printed softcover.

To fight the new German data retention laws, you can get the ebook gratis: Just install Freenet [148], then the following links work:

Escape total surveillance and get an ebook about the official GNU extension language for free today!

Python is the first language I loved. I dreamt in Python, I planned in Python, I thought I would never need anything else.

Download “Python to Guile” (pdf) [137]

You can read more about this on the Mercurial mailing list [153].

- Free: html [136] | pdf [137]

preview edition

(complete)

Yes, this means that with Guile I will contribute to a language developed via Git, but it won’t be using a proprietary platform.

If you like py2guile, please consider buying the book:

- Softcover: 14.95 € [147]

with digital companion

- Source: download [138]

free licensed under GPL

More information: draketo.de/py2guile [154]

I was curious why this happened so I read through PEP 0481. It's interesting that Git was chosen to replace Mercurial due to Git's greater popularity, yet a technical comparison was deemed as subjective. In fact, no actual comparison (of any kind) was discussed. What a shame. — Emmanuel Rosa on G+ [155]

yes. And the popularity contest wasn’t done in any robust way — they present values between 3x as popular and 18x as popular. That is a crazy margin of error — especially for a value on which to base a very disrupting decision. — my answer

Yesterday Python maintainers chose to move to GitHub and Git. Python is now developed using a C-based tool on a Ruby-based, unfree platform. And that changed my view on what’s happening in the community. Python no longer fosters its children and it even stopped dogfooding where its tools are as good as or better than other tools. I don’t think it will die. But I don’t bet on it for the future anymore. — EDIT to my answer on Quora [156] “is Python a dying language?” which originally stated “it’s not dying, it’s maturing”.

The PEP for github hedges somewhat by using github for code but not bug tracker. Not ideal considering BitKeeper, but a full on coup for GitHub. — Martin Owens

that’s something like basic self-defense, but my experience with projects who moved to GitHub is that GitHub soon starts to invade your workflows, changing your cooperation habits. At some point people realize that they can’t work well without GitHub anymore.

Not becoming dependent on GitHub while using it requires constant vigilance. Seeing how Python already switched to Git and GitHub because existing infrastructure wasn’t maintained does not sound like they will be able or willing to put in the work to keep independent. — my answer on G+ [157]

I was already pretty disappointed when I heard that Python is moving to Git. Seeing it choose the proprietary platform is an even sadder choice from my perspective. Two indicators for a breakage in the culture of the project.

For me that’s a reason to leave Python. Though it’s not like I did not get a foreboding of that. It’s why I started learning Guile Scheme in 2013 — and wrote about the experience.

I will still use Python for many practical tasks — it acquired the momentum for that, especially in science (I was a champion for Python in the institute, which is now replacing Matlab and IDL for many people here, and I will be teaching Python starting two weeks from now). I think it will stay strong for many years; a good language to start and a robust base for existing programs. But with what I learned the past years, Python is no longer where I place my bets. — slightly adjusted version of my post on the Mercurial mailing list [153].

(this is taken from a message I wrote to Brett, so I don’t have to say later that I stayed silent while Python went down. I got a nice answer, and despite the disagreement we said a friendly good bye)

Back when I saw that Python might move to git, I silently resigned and stopped caring to some degree. I have seen a few projects move to Git in the past years (and in every project problems remained even years after the switch), so when it came to cPython, the quarrel with git-fans just didn’t feel worthwhile anymore.

Seeing Python choose GitHub with the notion of “git is 3x to 18x more popular than Mercurial and free solutions aren’t better than GitHub” makes me lose my trust in the core development community, though.

PEP 481 states that it is about the quality of the tooling, but it names the popularity numbers quite prominently: python.org/dev/peps/pep-0481/ [158]

If they are not relevant, they shouldn’t be included, but they are included, so they seem to be relevant to the decision. And “the best tooling” is mostly subjective, too. The PEP itself shows this: it mostly talks about popularity, not quality. It even goes to some length about how to avoid many of the features of GitHub.

I’ve seen quite a few projects try to avoid lock-in to GitHub. None succeeded. Not even in one where two of about six active developers were deeply annoyed by GitHub. This is exactly what the scipy part of the PEP describes: lock-in due to group effects.

Finally, using hg-git is by far not seamless. I use it for several projects, and when the repositories become big (as cPython’s is), the overhead of the conversion becomes a major hassle. It works, but native Mercurial would be much more efficient. When pushing takes minutes, you start to think twice about whether you’ll just do the quick fix right now. Not to forget that at some point people start to demand signing of commits in git-style (not possible with hg-git, you can only sign commits mercurial-style) as well as other gitologisms (which have an analogue in Mercurial but aren’t converted by hg-git).

Despite my disappointment, I wish you all the best. Python is a really cool language. It’s the first one I loved and will always stay dear to me, so I’m happy that you work on it — and I hope you keep it up.

So, I think this is goodbye. A bit melancholic, but that’s how that goes.

Good luck to you in your endeavors,

Arne Babenhauserheide

And that’s enough negativity from me.

Thank you, Brett, for reminding me that even though we might disagree, it’s important to remember that people in the project are hit by negativity much harder than it feels for the one who writes.

For my readers: If that also happened to you one time or the other, please read his article:

Thank you, Brett. Despite everything I wrote here, I still think that Python is a great project, and it got many things right — some of which are things which are at least as important as code but much less visible, like having a large, friendly community.

I’m happy that Python exists, and I hope that it keeps going. And where I use programming to make a living, I’m glad when I can do it in Python. Despite all my criticism, I consider Python the best choice for many tasks, and this is also written in py2guile [146]: almost the first half of the book talks about the strengths of Python. Essentially I could not criticize Python as strongly as I’m doing it here if I did not like it so much. Keep that in mind when you think about what you read.

Also Brett now published an article where he details his decision to move to GitHub. It is a good read: The history behind the decision to move Python to GitHub — Or, why it took over a year for me to make a decision [160]

It's often said that Gentoo is all about choice, but that doesn't quite fit what it is for me.

After all, the highest ability to choose is Linux from scratch and I can have any amount of choice in every distribution by just going deep enough (and investing enough time).

What really distinguishes Gentoo for me is that it makes it convenient to choose.

Since we all have a limited time budget, many of us only have real freedom to choose because we use Gentoo, which makes it possible to choose with the distribution tools. Therefore calling it just “choice” doesn't ring true in general: it misses the reason why we can choose.

So what Gentoo gives me is not just choice, but convenient choice.

Some examples to illustrate the point:

I recently rebuilt my system after deciding to switch my disk layout (away from reiserfs towards a simple ext3 with reiser4 for the portage tree). When doing so I decided to try to use a "pure" KDE 4 - that means, a KDE 4 without any remains from KDE3 or qt3.

To use kde without any qt3 applications, I just had to put "-qt3" and "-qt3support" into my useflags in /etc/make.conf and "emerge -uDN world" (and solve any arising conflicts).

Imagine doing the same with a (K)Ubuntu...

Similarly to enable emacs support on my GentooXO (for all programs which can have emacs support), I just had to add the "emacs" useflag and "emerge -uDN world".

Just add

ACCEPT_LICENSE="-* @FSF-APPROVED @FSF-APPROVED-OTHER"

to your /etc/make.conf to make sure you only get software under licenses which are approved by the FSF.

For only free licenses (regardless of the approved state) you can use:

ACCEPT_LICENSE="-* @FREE"

All others get marked as masked by license. The default (no ACCEPT_LICENSE in /etc/make.conf) is “* -@EULA”: everything except licenses that require accepting an EULA. You can check your setting via emerge --info | grep ACCEPT_LICENSE. More information… [161]
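How such an ACCEPT_LICENSE string selects licenses can be modeled in a few lines of Python. This is a simplified toy model, not Portage's implementation: it assumes tokens are applied left to right with later tokens overriding earlier ones, it ignores packages with several licenses, and the group contents in `LICENSE_GROUPS` are made up for illustration.

```python
LICENSE_GROUPS = {  # illustrative membership only, not the real Gentoo groups
    "FREE": {"GPL-3", "MIT", "Apache-2.0"},
    "EULA": {"SomeVendor-EULA"},
}


def accepts(accept_license, license_name, groups=LICENSE_GROUPS):
    """Return True if license_name is accepted by the ACCEPT_LICENSE string."""
    accepted = False
    for token in accept_license.split():
        negate = token.startswith("-")
        if negate:
            token = token[1:]
        if token == "*":
            matches = True  # wildcard: every license
        elif token.startswith("@"):
            matches = license_name in groups.get(token[1:], set())
        else:
            matches = token == license_name
        if matches:
            accepted = not negate  # the last matching token wins
    return accepted


print(accepts("-* @FREE", "GPL-3"))            # accepted: member of the FREE group
print(accepts("-* @FREE", "SomeVendor-EULA"))  # rejected: "-*" cleared everything first
print(accepts("* -@EULA", "SomeVendor-EULA"))  # rejected: removed by "-@EULA"
```

Starting with "-*" clears whatever was accepted before, so only the groups named afterwards remain.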

Another part where choosing is made convenient in Gentoo are testing and unstable programs.

I remember my pain with a Kubuntu, where I wanted to use the most recent version of Amarok. I either had to add a dedicated Amarok-only testing repository (which I'd need for every single testing program), or I had to switch my whole system into testing. I did the latter and my graphical package manager ceased to work. Just imagine how quickly I ran back to Gentoo.

And then have a look at the ease of deciding to take one package into testing in Gentoo:

EDIT: Once I had a note here “It would be nice to be able to just add the missing dependencies with one call”. This is now possible with --autounmask-write.

And for some special parts (like KDE 4) I can easily say something like

(I don't have the kde-testing overlay on my GentooXO, where I write this post, so the exact command might vary slightly)

So to finish this post: For me, Gentoo is not only about choice. It is about convenient choice.

And that means: Gentoo gives everybody the power to choose.

I hope you enjoy it as I do!

Update 2016: I nowadays just use

emerge --sync; emerge @security

To keep my Gentoo up to date, I use daily and weekly update scripts which also always run revdep-rebuild after the Saturday night update :)

My daily update is via pkgcore [163] to pull in all important security updates:

pmerge @glsa

That pulls in the Gentoo Linux Security Advisories - important updates with mostly short compile time. (You need pkgcore for that: "emerge pkgcore")

Also I use two cron scripts.

Note: It might be useful to add the lafilefixer to these scripts (source [164]).

The following is my daily update (in /etc/cron.daily/update_glsa_programs.cron )

\#! /bin/sh

\### Update the portage tree and the glsa packages via pkgcore

\# spew a status message

echo $(date) "start to update GLSA" >> /tmp/cron-update.log

\# Sync only portage

pmaint sync /usr/portage

\# security relevant programs

pmerge -uDN @glsa > /tmp/cron-update-pkgcore-last.log || cat \

/tmp/cron-update-pkgcore-last.log >> /tmp/cron-update.log

\# And keep everything working

revdep-rebuild

\# Finally update all configs which can be updated automatically

cfg-update -au

echo $(date) "finished updating GLSA" >> /tmp/cron-update.log

And here's my weekly cron, executed every Saturday night (in /etc/cron.weekly/update_installed_programs.cron ):

\#!/bin/sh

\### Update my computer using pkgcore,

\### since that also works if some dependencies couldn't be resolved.

\# Sync all overlays

eix-sync

\## First use pkgcore

\# security relevant programs (with build-time dependencies (-B))

pmerge -BuD @glsa

\# system, world and all the rest

pmerge -BuD @system

pmerge -BuD @world

pmerge -BuD @installed

\# Then use portage for packages pkgcore misses (including overlays)

\# and for *EMERGE_DEFAULT_OPTS="--keep-going"* in make.conf

emerge -uD @security

emerge -uD @system

emerge -uD @world

emerge -uD @installed

\# And keep everything working

emerge @preserved-rebuild

revdep-rebuild

\# Finally update all configs which can be updated automatically

cfg-update -au

For a long time it bugged me that eix uses a separate database which I need to keep up to date. But no longer: With pkgcore [163] as fast as it is today, I set up pquery to replace eix.

The result is pix:

alias pix='pquery --raw -nv --attr=keywords'

(put the above in your ~/.bashrc)

The output looks like this:

$ pix pkgcore

* sys-apps/pkgcore

versions: 0.5.11.6 0.5.11.7

installed: 0.5.11.7

repo: gentoo

description: pkgcore package manager

homepage: http://www.pkgcore.org [165]

keywords: ~alpha ~amd64 ~arm ~hppa ~ia64 ~ppc ~ppc64 ~s390 ~sh ~sparc ~x86

It’s still a bit slower than eix, but it operates directly on the portage tree and my overlays — and I no longer have to use eix-sync for syncing my overlays, just to make sure eix is updated.

Aside from pquery, pkgcore also offers pmerge to install packages (almost the same syntax as emerge) and pmaint for synchronizing and other maintenance stuff.

From my experience, pmerge is hellishly fast for simple installs like pmerge kde-misc/pyrad, but it sometimes breaks with world updates. In that case I just fall back on portage. Both are Python, so when you have one, adding the other is very cheap (spacewise).

Also pmerge has the nice pmerge @glsa feature: Get Gentoo Linux security updates. Due to its almost unreal speed (compared to portage) checking for security updates now doesn’t hurt anymore.

$ time pmerge -p @glsa

* Resolving...

Nothing to merge.

real 0m1.863s

user 0m1.463s

sys 0m0.100s

It differs from portage in that you call world as a set explicitly, either via a command like pmerge -aus world or via pmerge -au @world.

pmaint on the other hand is my new overlay and tree synchronizer. Just call pmaint sync to sync all, or pmaint sync /usr/portage to sync only the given overlay (in this case the portage tree).

Using pix as replacement of eix isn’t yet perfect. You might hit some of the following:

pix always shows all packages in the tree and the overlays. The keywords are only valid for the highest version, though. marienz from #pkgcore on irc.freenode.net is working on fixing that.

If you only want to see the packages which you can install right away, just use pquery -nv. pix is intended to mimic eix as closely as possible, so I don’t have to change my habits ;) If it doesn’t fit your needs, just change the alias.

To search only in your installed packages, you can use pquery --vdb -nv.

Sometimes pquery might miss something in very broken overlay setups (like my very grown one). In that case, please report the error in the bugtracker [166] or at #pkgcore on irc.freenode.net:

23:27 <marienz> if they're reported on irc they're probably either fixed pretty quickly or they're forgotten

23:27 <marienz> if they're reported in the tracker they're harder to forget but it may take longer before they're noticed

I hope my text helps you in changing your Gentoo system further towards the system which fits you best!

If the video doesn’t show, you can also download it as Ogg Theora & Vorbis “.ogv” [167] or find it on youtube [168].

This video shows the activity of the Hurd coders and answers some common questions about the Hurd, including “How stagnated is Hurd compared to Duke Nukem Forever?”. It is created directly from commits to Hurd repositories, processed by community codeswarm [170].

Every shimmering dot is a change to a file. These dots align around the coder who did the change. The questions and answers are quotes from today's IRC discussions (2010-07-13) in #hurd at irc.freenode.net.

You can clearly see the influx of developers in 2003/2004 and then again a strengthening of the development in 2008 with fewer participants but higher activity than 2003 (though a part of that change likely comes from the switch to git with generally more but smaller commits).

I hope you enjoyed the high-level look on the activity of the Hurd project [171]!

PS: The last part is only the information title with music to honor Sean Wright [172] for allowing everyone to use and adapt his Album Enchanted [173].

→ An answer to just accept it, truth hurds [174], where Flameeyes told his reasons for not liking the Hurd and asked for technical advantages (and claimed, that the Hurd does not offer a concept which got incorporated into other free software, contributing to other projects). Note: These are the points I see. Very likely there are more technical advantages which I don’t see well enough to explain them.

The translator system in the Hurd is a simple concept that makes many tasks easy, which are complex with Linux (like init, network transparency, new filesystems, …). Additionally there are capabilities (give programs only the access they need - adjusted at runtime), subhurds and (academic) memory management.

Information for potential testers: The Hurd is already usable, but it is not yet in production state. It progressed a lot during the recent years, though. Have a look at the status report [175] if you want to see if it’s already interesting for you. See running the Hurd [176] for testing it yourself.


First off: FUSE [187] is essentially an implementation of parts of the translator system [188] (which is the main building block of the Hurd [171]) to Linux, and NetBSD recently got a port of the translators system of the Hurd [189]. That’s the main contribution to other projects that I see.

As an update in 2015: A pretty interesting development in the past few years is that the systemd developers have been bolting features onto Linux which the Hurd already provided 15 years ago. Examples:

- Socket activation provides on-demand startup like passive translators, but as a crude hack piggybacked on dbus which can only be used by dbus-aware programs, while passive translators can be used by any program which can access the filesystem.

- Calling privileged programs via systemd provides jailed privilege escalation like adding capabilities at runtime, but as a crude hack piggybacked on dbus and specialized services.

That means, there is a need for the features of the Hurd, but instead of just using the Hurd, where they are cleanly integrated, these features are bolted onto a system where they do not fit and suffer from bad performance due to requiring lots of unnecessary cruft to circumvent limitations of the base system. The clean solution would be to just set 2-3 full-time developers onto the task of resolving the last few blockers (mainly sound and USB) and then just using the Hurd.

On the bare technical side, the translator-based filesystem stands out: The filesystem allows for making arbitrary programs responsible for displaying a given node (which can also be a directory tree) and to start these programs on demand. To make them persistent over reboots, you only need to add them to the filesystem node (for which you need the right to change that node). Also you can start translators on any node without having to change the node itself, but then they are not persistent and only affect your view of the filesystem without affecting other users. These translators are called active, and you don’t need write permissions on a node to add them.

The filesystem implements stuff like Gnome VFS (gvfs) and KDE network transparency on the filesystem level, so those are available for all programs. And you can add a new filesystem as simple user, just as if you’d write into a file “instead of this node, show the filesystem you get by interpreting file X with filesystem Y” (this is what you actually do when setting a translator but not yet starting it (passive translator)).

One practical advantage of this is that the following works:

settrans -a ftp\: /hurd/hostmux /hurd/ftpfs /

dpkg -i ftp://ftp.gnu.org/path/to/*.deb

This installs all deb-packages in the folder path/to on the FTP server. The shell sees normal directories (beginning with the directory “ftp:”), so shell expressions just work.

You could even define a Gentoo mirror translator (settrans mirror\: /hurd/gentoo-mirror), so every program could just access mirror://gentoo/portage-2.2.0_alpha31.tar.bz2 and get the data from a mirror automatically: wget mirror://gentoo/portage-2.2.0_alpha31.tar.bz2

Or you could add a unionmount translator to root which makes writes happen at another place. Every user is able to make a readonly system readwrite by just specifying where the writes should go. But the writes only affect his view of the filesystem.

Starting a network process is done by a translator, too: The first time something accesses the network card, the network translator starts up and actually provides the device. This replaces most initscripts in the Hurd: Just add a translator to a node, and the service will persist over restarts.
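The on-demand startup of passive translators can be pictured with a small Python toy model. This is only an analogy and does not use any Hurd API: `Node`, `settrans` as a method name, and `make_network_translator` are hypothetical names chosen for this illustration.

```python
class Node:
    """Toy filesystem node that may carry a passive translator."""

    def __init__(self, name):
        self.name = name
        self.passive = None  # recorded on the node, so it would persist over reboots
        self.running = None  # the started translator, if any

    def settrans(self, factory):
        """Record the translator on the node; nothing is started yet."""
        self.passive = factory

    def read(self):
        if self.passive is None:
            return f"<plain node {self.name}>"
        if self.running is None:
            self.running = self.passive()  # started on first access only
        return self.running(self.name)


started = []


def make_network_translator():
    """Stands in for launching the program that provides the device."""
    started.append("network")
    return lambda name: f"device provided behind {name}"


eth0 = Node("/dev/eth0")
eth0.settrans(make_network_translator)
print(started)      # [] : setting the translator did not start it
print(eth0.read())  # first access starts the translator
print(eth0.read())  # later accesses reuse the running translator
print(started)      # ['network'] : it was started exactly once
```

In the model, as in the description above, the service exists as soon as the node is configured, but it only costs resources once somebody actually uses it.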

It’s a surprisingly simple concept, which reduces the complexity of many basic tasks needed for desktop systems.

And at its most basic level, Hurd is a set of protocols for messages which allow using the filesystem to coordinate and connect processes (along with helper libraries to make that easy).

Also it adds POSIX compatibility to Mach while still providing access to the capabilities-based access rights underneath, if you need them: You can give a process permissions at runtime and take them away at will. For example you can start all programs without permission to use the network (or write to any file) and add the permissions when you need them.

Different from Linux, you do not need to start privileged and drop permissions you do not need (governed by the program which is run), but you start as unprivileged process and add the permissions you need (governed by an external process):

groups # → root

addauth -p $(ps -L) -g mail

groups # → root mail
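The direction of control in this model (an external authority adds rights to an already running process) can be sketched as a Python toy model. It is an analogy only and calls no Hurd interfaces; the classes `Process` and `AuthServer` and their methods are hypothetical names for this illustration.

```python
class Process:
    """Toy process that starts without any group membership."""

    def __init__(self, name):
        self.name = name
        self.groups = set()

    def read_mail_spool(self):
        if "mail" not in self.groups:
            raise PermissionError(f"{self.name} lacks the mail group")
        return "mail spool opened"


class AuthServer:
    """External authority that grants and revokes groups at runtime."""

    def addauth(self, process, group):
        process.groups.add(group)

    def rmauth(self, process, group):
        process.groups.discard(group)


auth = AuthServer()
reader = Process("mailreader")

try:
    reader.read_mail_spool()
except PermissionError as error:
    print("before:", error)

auth.addauth(reader, "mail")  # granted from outside while the process runs
print("after:", reader.read_mail_spool())

auth.rmauth(reader, "mail")   # and taken away again at will
```

The process itself never decides which rights it holds; it only finds out whether an operation is allowed at the moment it tries.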

And then there are subhurds (essentially lightweight virtualization which allows cutting off processes from other processes without the overhead of creating a virtual machine for each process). But that’s an entire post of its own…

And the fact that a translator is just a simple standalone program means that these can be shared and tested much more easily, opening up completely new options for lowlevel hacking, because it massively lowers the barrier of entry.

For example the current Hurd can use the Linux network device drivers and run them in userspace (via DDE), so you can simply restart them and a crashing driver won’t bring down your system.

And then there is the possibility of subdividing memory management and using different microkernels (by porting the Hurd layer, as partly done in the NetBSD port), but that is purely academic right now (search for Viengoos to see what it's about).

So in short:

The translator system in the Hurd is a simple concept that makes many tasks easy, which are complex with Linux (like init, network transparency, new filesystems, …). Additionally there are capabilities (give programs only the access they need - adjusted at runtime), subhurds and (academic) memory management.

Best wishes,

Arne

PS: I decided to read flameeyes’ post as “please give me technical reasons to dispel my emotional impression”.

PPS: If you liked this post, it would be cool if you’d flattr it:

[190]


PPPS: Additional information can be found in Gaël Le Mignot’s talk notes [191], in niches for the Hurd [192] and the GNU Hurd documentation pages [193].

P4S: This post is also available in the Hurd Staging Wiki [194].

AGPL [195] is a hack on copyright, so it has to use copyright, else it would not compile/run.

All the GPL [196] licenses are a hack on copyright. They insert a piece of legal code into copyright law to force it to turn around on itself.

You run that on the copyright system, and it gives you code which can’t be made unfree.

To be able to do that, it has to be written in copyright language (else it could not be interpreted).

my_code = "<your code>"

def AGPL ( code ):
    """
    >>> is_free ( AGPL ( code ) )
    True
    """
    return eval (
        transform_to_free ( code ) )

copyright ( AGPL ( my_code ) )

You pass “AGPL ( code )” to the copyright system, and it ensures the freedom of the code.

The transformation means that I am allowed to change your code, as long as I keep the transformation, because copyright law sees only the version transformed by AGPL, and that stays valid.

Naturally both AGPL definition and the code transformed to free © must be ©-compatible. And that means: All rights reserved. Else I could go in and say: I just redefine AGPL and make your code unfree without ever touching the code itself (which is initially owned by you by the laws of ©):

def AGPL ( code ):
    """
    >>> is_free ( AGPL ( code ) )
    False
    """
    return eval (
        transform_to_mine ( code ) )

In this Python-powered copyright-system, I could just define this after your definition but before your call to copyright(), and all calls to AGPL ( code ) would suddenly return code owned by me.
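The redefinition trick can be tried out in real Python. This is only a minimal sketch of the metaphor, not a model of actual law: `transform_to_free`, `transform_to_mine` and `copyright` are hypothetical stand-ins which just tag a string with an owner, and `eval` is left out to keep the example harmless.

```python
def transform_to_free(code):
    # Hypothetical stand-in: mark the code as free for everyone.
    return {"code": code, "owner": "everyone"}


def transform_to_mine(code):
    # Hypothetical stand-in: mark the code as owned by the attacker.
    return {"code": code, "owner": "me"}


def copyright(work):
    # Hypothetical stand-in for the copyright system: it only reports
    # who controls the work it was handed.
    return work["owner"]


def AGPL(code):
    return transform_to_free(code)


my_code = "<your code>"
before = copyright(AGPL(my_code))


# The redefinition: after your definition, but before your next call.
def AGPL(code):  # deliberate redefinition of the same name
    return transform_to_mine(code)


after = copyright(AGPL(my_code))
print(before, after)
```

Python looks up the name AGPL only when the call happens, so the second call silently picks up the new definition although `my_code` was never touched.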

Or you would have to include another way of defining which exact AGPL you mean. Something like “AGPL, but only the versions with the sha1 hashes AAAA BBBB and AABA”. CC tries to use links for that, but what do you do if someone changes the DNS resolution to point creativecommons.org to allmine.com? Whose DNS server is right, then, legally speaking?
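Pinning a license by hash could look roughly like this. It is a sketch under assumptions: the license texts are placeholders, `ACCEPTED_DIGESTS` and `is_pinned_license` are names made up for illustration, and SHA-256 stands in for the sha1 mentioned above, because SHA-1 is no longer considered collision resistant.

```python
import hashlib


def digest(license_text):
    # Hash the exact bytes of the license text.
    return hashlib.sha256(license_text.encode("utf-8")).hexdigest()


# Placeholder texts, not real license wording.
original_text = "You may share and change this work; derived works stay free."
tampered_text = "You may share and change this work; derived works are mine."

# The author pins the exact versions they mean, by hash instead of by name.
ACCEPTED_DIGESTS = {digest(original_text)}


def is_pinned_license(license_text):
    # True only if the text is byte-for-byte one of the pinned versions.
    return digest(license_text) in ACCEPTED_DIGESTS


print(is_pinned_license(original_text))
print(is_pinned_license(tampered_text))
```

With such a pin it no longer matters which server delivers the text or under which name: only the pinned wording is accepted.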

In short: AGPL is a hack on copyright, so it has to use copyright, else it would not compile/run.

→ An answer I wrote to this question on Quora [197].

Software Engineering: What is the truth of 10x programmers?

Do they really exist?…

Let’s answer the other way round: I once had to take heavy anti-histamines for three weeks. My mind was horribly hazy from that, and I felt awake only about two hours per day. However I spent every day working on a matrix multiplication problem.

It was three weeks of failure, because I just could not grasp the problem. I was unable to hold it in my mind.

Then I could finally drop the anti-histamine.

On the first day I solved the problem on my way to buy groceries. On the second day I planned the implementation while walking for two hours. On the third day I finished the program.

This taught me to accept it when people don’t manage to understand things I understand: I know that the brain can actually have different paces and that complexity which feels easy to me might feel infeasible for others. It sure did feel that way to me while I took the anti-histamines.

It also taught me to be humble: There might be people to whom my current state of mind feels like taking anti-histamines felt to me. I won’t be able to even grasp the patterns they see, because they can use another level of complexity.

To get a grasp of the impact, I ask myself a question: How would an alien solve problems who can easily keep 100 things in its mind — instead of the 4 to 7 which is the rough limit for humans [198]?

This is the biggest news item [199] for free culture and free software in the past 5 years: The Creative Commons Attribution-ShareAlike license is now one-way compatible with the GPL. See the messages from Creative Commons [200] and from the Free Software Foundation [201].

Some license compatibility legalese might sound small, but the impact of this is hard to overestimate.

(I’ll now revise some of my texts about licensing: CC BY-SA got a major boost in utility because it no longer excludes usage in copyleft documents which need the source to have a defended sharealike clause)

You have an awesome project, but you see people reach for inferior tools? There are people using your project, but you can’t reach the ones you care about? Read on for a way to ensure that your communication doesn’t ruin your prospects but instead helps your project to shine.

Communicating your project is an essential step for getting the users you want. Here I summarize my experience from working on several different projects including KDE [208] (where I learned the basics of PR - yay, sebas!), the Hurd [171] (where I could really make a difference by improving the frontpage and writing the Month of the Hurd), Mercurial [209] (where I practiced minimally invasive PR) and 1d6 [207] (my own free RPG where I see how much harder it is to do PR, if the project to communicate is your own).

Since voicing the claim that marketing is important often leads to discussions with people who hate marketing of any kind, I added an appendix [210] with an example which illustrates nicely what happens when you don’t do any PR - and what happens if you do PR of the wrong kind.

If you’re pressed for time and want the really short form, just jump to the questionnaire [211].

Before we jump directly to the guide, there is an important term to define: Good marketing. That is the kind of marketing we want to do.

The definition I use here is this:

Good marketing ensures that the people to whom a project would be useful learn about the project.

and

Good marketing starts with the existing strengths of a project and finds people to whom these strengths are useful.

Thus good marketing does not try to interfere with the greater plan of the project, though it might identify some points where a little effort can make the project much more interesting to users. Instead it finds users to whom the project as it is can be useful - and ensures that these know about the project.

Be fair to competitors, be honest to users, put the project goals before generic marketing considerations.

As such, good marketing is an interface between the project and its (potential) users.

This guide depends on one condition: Your project already has at least one area in which it excels over other projects. If that isn’t the case, please start by making your project useful to at least some people.

The basic way for communicating your project to its potential users always follows the same steps.

To make this text easier to follow, I’ll intersperse it with examples from the latest project where I did this analysis: GNU Guile: The GNU Ubiquitous Intelligent Language for Extensions [221]. Guile provides a nice example, because its mission is clearly established in its name and it has lots of backing, but until our discussion it had a Wikipedia page which was unappealing to the point of being hostile towards Guile itself.

To improve the communication of our project, we first identify our target groups.

To do so, we begin by asking ourselves who would profit from our project:

Try to find about 3 groups of people and give them names which identify them. Those are the people we must reach to grow in the short term.

In the next step, we ask ourselves whom we want or need as users to fulfill our mission (our long-term goal):

Again try to find about 3 groups of people and give them names which identify them. Those are the people we must reach to achieve our long-term goal. If while writing this down you find that one of the already identified groups which we could reach would actually lead us away from our goal, mark them. If they aren’t direly needed, we would do best to avoid targeting them in our communication, because they will hinder our long-term progress: They could become a liability which we cannot get rid of again.

Now we have about 6 target groups: Those are the people who should know about our project, either because they would benefit from it for pursuing their goals, or because we need to reach them to achieve our own goals. We now need to find out which kind of information they actually need or look for.

GNU Guile is called The GNU Ubiquitous Intelligent Language for Extensions [221]. So its mission is clear: Guile wants to become the de-facto standard language for extending programs - at least within the GNU project.

This part just requires thinking ourselves into the role of each of the target groups. For each of the target groups, ask yourself:

What would you want to know, if you were to read about our project?

As result of this step, we have a set of answers. Judge them on their strengths: Would these answers make you want to invest time to test our project? If not, can we find a better answer?

If our answers for a given group are not yet strong enough, we cannot yet communicate our project convincingly to them. In that case it is best to postpone reaching out to that group, otherwise they could get a lasting weak image of our project which would make it harder to reach them when we have stronger answers at some point in the future.

Remove all groups whose wishes we cannot yet fulfill, or for whom we do not see ourselves as the best choice.

Now we have answers for the target groups. When we now talk or write about our project, we should keep those target groups in mind.

You can make that arbitrarily complex, for example by trying to find out which of our target groups use which medium. But let’s keep it simple:

Ensure that our website (and potentially existing wikipedia page) includes the information which matters to our target groups. Just take all the answers for all the target groups we can already reach and check whether the basic information contained in them is given on the front page of our website.

And if not, find ways to add it.

As next steps, we can make sure that the questions we found for the target groups not only get answered, but directly lead the target groups to actions: For example to start using our project.

For Guile, we used this analysis to fix the Wikipedia-Page. The old version [227] mainly talked about history and weaknesses (to the point of sounding hostile towards Guile), and aside from the latest release number, it was horribly outdated. It also did not provide the information our target groups required.

The current Wikipedia-Page of GNU Guile [228] works much better - for the project as well as for the readers of the page. Just compare them directly and you’ll see quite a difference. But aside from sounding nicer, the new site also addresses the questions of our target groups. To check that, we now ask: Did we include information for all the potential user-groups?

So there you go: Not perfect, but most of the groups are covered. And this also ensures that the Wikipedia-page is more interesting to its readers: A clear win-win.

Additional points which we should keep in mind:

For whom are we already useful or interesting? Name them as Target-Groups.

Whom do we want as users in the long run? Name them as Target-Groups.

Use bab-com to avoid bad-com ☺ - yes, I know this phrase is horrible, but it is catchy and that fits this article: you need catchy things

The mission statement is a short paragraph in which a project defines its goal.

A good example is:

Our mission is to create a general-purpose kernel suitable for the GNU operating system, which is viable for everyday use, and gives users and programs as much control over their computing environment as possible. → GNU Hurd mission explained [229]

Another example again comes from Guile:

Guile was conceived by the GNU Project following the fantastic success of Emacs Lisp as an extension language within Emacs. Just as Emacs Lisp allowed complete and unanticipated applications to be written within the Emacs environment, the idea was that Guile should do the same for other GNU Project applications. This remains true today. → Guile and the GNU project [230]

Closely tied to the mission statement is the slogan: A catch-phrase which helps to anchor the gist of your project in your reader’s mind. Guile does not have that, yet, but judging from its strengths, the following could work quite well for Guile 2.0, though it falls short of Guile in general:

GNU Guile scripting: Use Guile Scheme, reuse anything.

We saw why it is essential to communicate the project to the outside, and we discussed a simple structure to check whether our way of communication actually fits our project’s strengths and goals.

Finding the communication strategy actually boils down to 3 steps:

Also a clear mission statement, slogan and project description help to make the project more tangible for readers. In this context, good marketing means to ensure that the right people learn about the real strengths of the project.

With that I’ll conclude this guide. Have fun and happy hacking!

— Arne Babenhauserheide

In free software we often think that quality is a guarantee for success. But in just the 10 years I’ve been using free software so far, I saw my share of technically great projects succumbing to inferior projects which simply reached more people and used that to build a dynamic which greatly outpaced the technically better product.

One example for that are pkgcore and paludis. When portage, the package manager of Gentoo, grew too slow because it did ever more extensive tests, two teams set out to build a replacement.

One of the teams decided that the fault of the low performance lay in Python, the language used by portage. That team built a package manager in C++ and had --wonderfully-long-command-options without shortcuts (have fun typing), and you actually had to run it twice: Once to see what would get installed and then again to actually install it (while portage had had an --ask option for ages, with -a as shortcut). And it forgot all the work it had done in the previous run, so you could wait twice as long for the result. They also had wonderful Latin names, and they managed the feat of being even slower than portage, despite being written in C++. So their claim that C++ would be magically faster than Python was simply wrong (because they skipped analyzing the real performance bottlenecks). They called their program paludis.

Note: Nowadays paludis has a completely new commandline interface which actually supports short command options. That interface is called cave and looks sane.